Wow! January just flew by in the whirl of new semester business. So of course my first post of 2015 is actually going to be a final report on the digital humanities class project from last semester.

Here is the link to the Google map the class made: https://www.google.com/maps/d/edit?mid=zDX3AIg56Xx0.kx2jSWf18o-A

- There are many more blue pins than red only because I limited the number of built works by female architects for each group to 15 (for comparison, the textbook had anywhere from 7 to 24 built works per section, each section pinned by an individual in the first layer). I wanted them to do slightly deeper research on the women architects rather than broader research. Students did say, though, that in some cases it was challenging to find 15 built works for which they could also find at least one image and some useful data (including, sometimes, where the work had been located if it had since been destroyed).

- Their work on the first part of the project ranged all over the place, from A+ to C (I almost never get failing students because of UNC’s generous add/drop deadline). This range was fairly obvious from looking at their work on the map, and it was much of a piece with their other grades in the course. Their work on the second part of the project ranged from A+ to B+, which was a good sign that they had improved since their first run at it.

- I was surprised at how much I learned from their work on the second part of the project, which brought to light, among other things, that a woman received the first patent for an architectural design (in North Carolina, no less!) and highlighted the neglected work of the first licensed African-American female architect Norma Merrick Sklarek (whose firm wouldn’t ever tell clients that she was the principal designer for fear they would bolt from the project). The students really brought a higher level of engagement to this project than I am used to seeing in previous incarnations of the course.

- I also took all of their data from the second part of the project and used it to create a Google Fusion Table (found here), to which I added a field for building type (school, museum, office building, etc.), and I showed them various ways they could filter the data. One of the charts I had created was a network of building type by year, which revealed that a lot of early women architects built houses and many more current female architects build office buildings. This raised questions for the class and led to an interesting discussion of late 19th-century female architects finding more commissions for houses precisely because of sexist stereotypes about the association of women with the domestic realm (they would just be better at houses, because this is where they spend all of their time and provide the most socially appropriate value as wives and mothers). We also discussed how this distribution might reflect the students’ own assumptions and biases in doing their research and in choosing which 15 works, among potentially many others, to add to the map for a given date range.

- The good: Students were asked after the final presentations to give feedback on the project as a whole. Some found the project fun (re: the complaint in number 6 below, one student gave the rejoinder “I don’t care, I really liked it, it was fun”). Others found it made the built works much more memorable and helped them better understand how to do formal analysis of architecture. Others really liked the interactivity of the exercise (being able to add video, images, and links to the pins, then explore what their peers had created and move around the map geographically), and others found it gave them a sense of ownership over the materials they were pinning to the map. They were unanimously in favor of sharing their work outside the class, and a couple of students wanted to know if the project would continue to grow after this. One student used his newly acquired mapping skills in an Islamic art class to map artistic commissions by one particular Andalusian caliph.

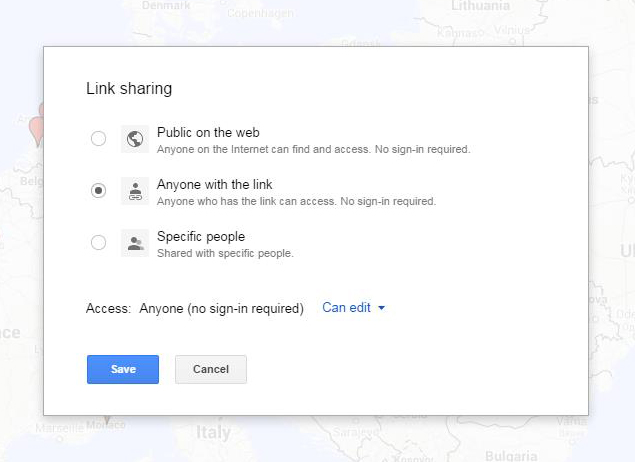
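For anyone who wants to try the building-type-by-period comparison from the Fusion Table discussion above without Fusion Tables, here is a minimal sketch in Python. The rows, the field names (`year`, `building_type`), and the 1950 cutoff are all my own placeholders for illustration; they are not the class's actual data or the way the original chart was built.

```python
from collections import Counter

# Placeholder rows, invented purely for illustration (NOT the class's data).
rows = [
    {"year": 1895, "building_type": "house"},
    {"year": 1902, "building_type": "house"},
    {"year": 1988, "building_type": "office building"},
    {"year": 2005, "building_type": "office building"},
    {"year": 2010, "building_type": "museum"},
]


def tally_by_period(rows, cutoff=1950):
    """Count building types before the cutoff year ("early") and from it onward ("recent")."""
    counts = {"early": Counter(), "recent": Counter()}
    for row in rows:
        period = "early" if row["year"] < cutoff else "recent"
        counts[period][row["building_type"]] += 1
    return counts


counts = tally_by_period(rows)
for period, counter in counts.items():
    # most_common() lists building types from most to least frequent
    print(period, counter.most_common())
```

A tally like this only describes what ended up on the map, so it carries the same caveat we talked about in class: it reflects the students' choices of which works to pin as much as it reflects the architects' careers.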

- The bad but fixable: One student complained about the length of time it took to actually make the pins on the map (6 or 7 hours), so we talked as a group about how to improve that aspect of the project. Most students would have preferred to just put their data and links into a shared Google Drive spreadsheet and then let me “make” the map with that. Based on a test with some of the female architect data, and on how Google Maps ingests data from a spreadsheet, this would in turn require adding geolocation fields (as an example, the map just couldn’t find an observatory in the far north of Finland from the name of the town it was in). It might also still require adding some images via the individual pin on the map (the map couldn’t convert some URLs into images and just added them as links at the bottom of the pin description, though it seemed to do just fine with embedding video URLs).

- The kinda bad: I paid for Google Maps Pro to allow us to put so many pins on the map, and in the last week of the course Google announced it will now be free. But it was only $5 a month, and I can easily get my department to reimburse me the teaching expense of $20 + tax.
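If I do go the shared-spreadsheet route next time, the sheet will need explicit coordinates for every built work. Below is a rough sketch, in Python, of what one row per pin could look like when exported as a CSV. The column names and the example row are hypothetical placeholders of mine, not a required Google format and not real project data; the only point is that latitude and longitude travel with each row so the map never has to guess a location from a town name.

```python
import csv
import io

# Hypothetical column layout; the names are my assumption, not a Google requirement.
FIELDS = ["name", "architect", "latitude", "longitude", "description", "image_url"]


def rows_to_csv(rows):
    """Return CSV text for the rows, refusing any row that lacks coordinates."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    for row in rows:
        if row.get("latitude") is None or row.get("longitude") is None:
            raise ValueError(f"missing coordinates for {row.get('name')!r}")
        writer.writerow(row)
    return buffer.getvalue()


# Placeholder row, invented for illustration only.
example_rows = [
    {
        "name": "Example observatory",
        "architect": "Example Architect",
        "latitude": 69.0,
        "longitude": 27.0,
        "description": "Placeholder description",
        "image_url": "https://example.com/image.jpg",
    }
]

csv_text = rows_to_csv(example_rows)
print(csv_text)
```

Rejecting rows without coordinates up front should mean the missing-location problem surfaces while students are still filling in the sheet, rather than after the import.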